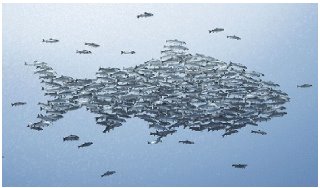

Democracy is most often thought of as the opposite of totalitarianism. Here I mean democracy as opposed to anarchy. Some people are raising their voices against the trend of collective intelligence, the wisdom of the crowds, that has been the hype of the net for the past few years. Wikipedia is the crown jewel of this trend of empowering people for the common good, and probably because of the project's visibility it has been the one to take the heat from the backlash.

Is Wikipedia dead, as Nicholas Carr suggests in his blog? His provocative title was flame bait, but he does call attention to some interesting things happening at Wikipedia. Wikipedia is not dead; it is just changing. It has to change to cope with the increase in visibility and vandalism, and to deal with situations where no real consensus is possible.

The system is evolving by restricting anonymous posting and allowing editors to apply temporary editing restrictions to some pages. It is becoming more bureaucratic in nature, with disputes and mechanisms to deal with the discord. What Nicholas Carr says is dead is the ideal that anyone can edit anything in Wikipedia, and I would say this is actually good news.

Following his post on the death of Wikipedia, Carr points to an essay by Jaron Lanier entitled "Digital Maoism". It is a bit long, but I highly recommend it.

Some quotes from the text:

"Every authentic example of collective intelligence that I am aware of also shows how that collective was guided or inspired by well-meaning individuals. These people focused the collective and in some cases also corrected for some of the common hive mind failure modes. The balancing of influence between people and collectives is the heart of the design of democracies, scientific communities, and many other long-standing projects. "

Sites like Wikipedia are important online experiments. They are trying to develop the tools that allow useful work to come out of millions of very small contributions. I think this will have to go through some form of representative democracy. We still have to work on ways to establish the governing body in these internet systems, which essentially means deciding in whom we place our trust for a particular task or realm of knowledge. For this we will need better ways to define identity online and to establish trust relationships.

Further reading:

Wiki-truth
