There is a paper out in PNAS about the distribution of binding free energies in the yeast two-hybrid datasets. Although I still have to dig into the model they used, I found the result quite interesting: they observe that the average binding energy decreases with cellular complexity, i.e. binding becomes weaker in more complex organisms.

Some sentences in there made my hair stand on end, like: "more evolved organisms have weaker binary protein-protein binding". What does "more evolved" mean? Also, in figure 4 of the paper they plot μ (a parameter related to the average binding energy) against divergence time without stating which species are being compared.

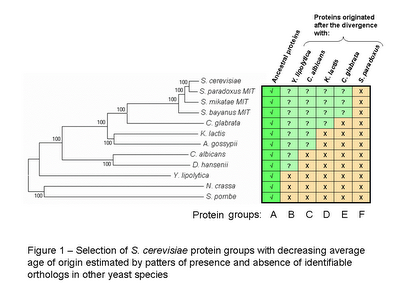

This result fits well with another paper, published a while ago in PLoS Comp Bio, about protein family expansions and complexity. Christine Vogel and Cyrus Chothia show (among other things) which protein domain expansions best correlate with complexity. They used the number of cell types as a proxy for organism complexity. Near the top of the list (in table 2) you can find several peptide-binding domains, known to be of low specificity since the short peptides they bind do not need a folded structure to interact.

What I would like to know is the correlation between binding affinity and binding specificity. For example, SH2 domains bind much more tightly than SH3 domains, although neither is a very specific binding domain. Perhaps, in general, lower average binding affinity corresponds to lower average binding specificity.
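To put "binds much more tightly" on the same scale as the binding energies discussed above, a dissociation constant can be converted to a standard binding free energy with ΔG = RT ln(Kd / 1 M). The sketch below does that; the Kd values are illustrative assumptions picked for an SH2-like and an SH3-like interaction, not numbers from either paper.

```python
import math

# Gas constant in kcal/(mol*K)
R_KCAL = 1.987204e-3


def binding_free_energy(kd_molar: float, temp_k: float = 298.15) -> float:
    """Standard binding free energy (kcal/mol) from a dissociation constant.

    Uses dG = RT ln(Kd / 1 M), i.e. a 1 M standard state.
    More negative values mean tighter binding.
    """
    return R_KCAL * temp_k * math.log(kd_molar)


# Illustrative Kd values only (assumed, not taken from the papers discussed):
# sub-micromolar for an SH2-like pair, tens of micromolar for an SH3-like pair.
examples = {
    "SH2-like (assumed Kd = 0.5 uM)": 0.5e-6,
    "SH3-like (assumed Kd = 20 uM)": 20e-6,
}

for name, kd in examples.items():
    print(f"{name}: dG = {binding_free_energy(kd):.2f} kcal/mol")

# Each 10-fold change in Kd shifts dG by RT*ln(10), about 1.36 kcal/mol at 25 C.
print(f"10x in Kd = {R_KCAL * 298.15 * math.log(10):.2f} kcal/mol")
```

Because the relationship is logarithmic, a 40-fold difference in Kd amounts to only about 2 kcal/mol, which is worth keeping in mind when reading shifts in average binding energy across organisms.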

Why would complexity correlate with binding specificity? I think one important factor is cell size. An increase in size has allowed spatial factors to play a larger role in determining the cellular response. In the real cell (as opposed to binary assays), binding specificity is also determined by localization to subcellular structures.
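One way to see why localization matters is a simple 1:1 binding equilibrium, where the fraction bound depends on the partner concentration relative to Kd. The sketch below assumes the partner is in excess (free concentration approximately equals total) and that co-localization simply confines the same number of molecules to a smaller volume; the Kd, the concentration and the 1% volume fraction are all hypothetical values for illustration.

```python
def fraction_bound(partner_conc_molar: float, kd_molar: float) -> float:
    """Fraction of a protein bound to its partner in a simple 1:1 equilibrium,
    assuming the partner is in excess (free ~ total concentration)."""
    return partner_conc_molar / (partner_conc_molar + kd_molar)


def local_concentration(total_conc_molar: float, volume_fraction: float) -> float:
    """Concentration if all partner molecules are confined to a sub-volume
    occupying `volume_fraction` of the cell (0 < volume_fraction <= 1)."""
    if not 0 < volume_fraction <= 1:
        raise ValueError("volume_fraction must be in (0, 1]")
    return total_conc_molar / volume_fraction


kd = 20e-6      # assumed weak interaction, Kd = 20 uM
partner = 1e-6  # assumed 1 uM average cellular concentration

# Partner spread evenly through the cell
diffuse = fraction_bound(partner, kd)
# Hypothetical: both proteins recruited to a structure taking up 1% of the cell volume
colocalized = fraction_bound(local_concentration(partner, 0.01), kd)

print(f"diffuse: {diffuse:.2f}, co-localized: {colocalized:.2f}")
```

In this toy model the same weak pair goes from mostly unbound to mostly bound purely through co-localization, without any change in its biophysical affinity.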

One practical reminder from this is that even if we had a perfect method to determine biophysical binding specificity, we would still get poor results unless we can also predict all the other factors that determine whether two proteins will bind (e.g., localization, expression).
