The peer review trial

The next day after finding about

PLoS One I saw the

announcement for the Nature peer review trial. For the next couple of months any author submitting to Nature can opt to go trough a parallel process of open peer review. Nature is also promoting the discussion on the issue online

in a forum where anyone can comment. You can also track the discussion going on the web through Connotea under the tag of "

peer review trial", or under the "

peer review" tag in Postgenomic.

I really enjoyed reading this opinion on "

Rethinking Scholarly Communication", summarized

in one of the Nature articles. Briefly, the authors first describe (from Roosendaal and Geurts) the required functions any system of scholarly communication:

*

Registration, which allows claims of precedence for a scholarly finding.

*

Certification, which establishes the validity of a registered scholarly claim.

*

Awareness, which allows actors in the scholarly system to remain aware of new claims and findings.

*

Archiving, which preserves the scholarly record over time.

*

Rewarding, which rewards actors for their performance in the communication system based on metrics derived from that system.

The authors then try to show that it is possible to build a science communication system where all these functions are not centered in the journal, but are separated in different entities.

This would speed up science communication. There is a significant delay between submitting a communication and having it accessible to others because all the functions are centered in the journals and only after the certification (peer reviewing) is the work made available.

Separating the registration from the certification also has the potential benefit of exploring parallel certifications. The manuscripts deposited in the pre-print servers can be evaluated by the traditional peer-review process in journals but on top of this there is also the possibility of exploring other ways of certifying the work presented. The authors give the example of

Citabase but also blog aggregation sites like

Postgenomic could provide independent measures of the interest of a communication.

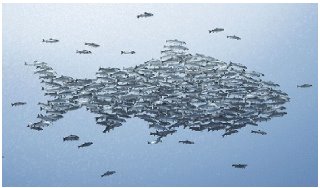

More generally and maybe going a bit of-topic, this reminded me of the correlation between

modularity and complexity in biology. By dividing a process into separate and independent modules you allow for exploration of novelty without compromising the system. The process is still free to go from start to end in the traditional way but new subsystems can be created to compete with some of modules.

For me this discussion, is relevant for the whole scientific process , not just communication. New web technologies lower the costs of establishing collaborations and should therefore ease the recruitment of resources required to tackle a problem. Because people are better at different task it does make some sense to increase the modularity in the scientific process.