Social network dynamics in a conference setting

(disclaimer: This was not peer reviewed and is not serious at all :)

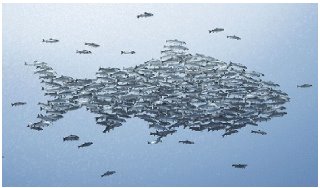

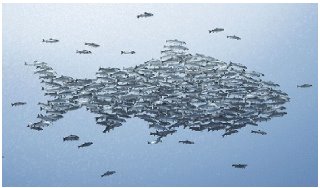

To study the dynamics in social network topology we decided evaluate how some nodes (also called humans) interact in defined experimental conditions. We used the scientific meeting setting that we think can serve as a model for this type of studies. We observed human-human interactions during the meeting breaks by taking snapshots and calculating inter-human distances. We defined an arbitrary cut-off to determine the binary interactions between all the humans present in the study.

The first analysis we preformed was under the so call "conference breaks" model where our nodes are allowed to interact for brief time intervals after being subjected lengthy lectures.

We observed an interesting clustered network topology that can be described with a power law distribution. Most nodes in the network have few interactions while a small fractions of humans was found to consistently interact with a large number of other nodes. We found also some nodes that did not show any interactions in our studies even when several "conference breaks" were preformed. We believe that these could be pseudo-humans that were included in our study by mistake. These pseudo-humans might be on the way to extinction from the humeone.

Having built this network of human-human interaction on a large scale we decided to investigate what human properties might be correlated with human hubs. We used previous large-scale studies of human properties like height, gender and number of papers published to test this.

We show here that although gender shows a significant correlation with human hubness, the best predictor for hubs in the conference breaks networks is actually number of papers published. We tried to refine this further by introducing a new human measurement we call "hypeness". Hypeness of a human was calculated as a modification of the number of papers published weighted by the impact factor of the journals where the papers were published and also the number of times cited in popular media articles. We show here that hypeness does significant better at predicting hub nodes in this network.

Given that networks are dynamic we set out to map the changes in network structure with time. To simulate this we perturbed the gathering using a small-compound (EtOH) that we administered in liquid form. With time we observed a noticeable change in the network. Although the overall topological properties were maintained, the nature of the hubs changed dramatically. In this new network state that we call the "drunk" state, the best predictor for the highly connected hubs is clearly gender. We believe this clearly proves that social networks in conference settings are very dynamic with time.

To prove that gender was indeed the best indicator of hubness and not some strange artifact we used deletions studies. Random female nodes where struck with a sudden case of "sleepiness" and the perturbed network was observed. We show here that random female deletion leads to a rapid collapse of the network. The same is not observed with random deletion of the hypest nodes, proving our initial proposition.

Tuesday, September 05, 2006

Propagation of Errors in Review Articles

Thomas J. Katz signs a small letter in Science warning us about the propagation of errors in review articles. The author gives a scary example of an incorrect citation propagated through 9 reviews (if I counted correctly). The cited paper does not contain the experiment that all the reviews mention and as it seems it was actually never published anywhere. Very scary.

As more science moves online with more individual voices, will this propagation of errors be accentuated or reduced?

Thomas J. Katz signs a small letter in Science warning us about the propagation of errors in review articles. The author gives a scary example of an incorrect citation propagated through 9 reviews (if I counted correctly). The cited paper does not contain the experiment that all the reviews mention and as it seems it was actually never published anywhere. Very scary.

As more science moves online with more individual voices, will this propagation of errors be accentuated or reduced?

Tags: reviews, science, error propagation

How to recognize you have become senior faculty

I am back from holidays and trying to plow trough the RSS feeds/content alerts that accumulated in these two weeks. I might post on couple of things that catch my eye.

Here is a funny editorial from Gregory A Petsko talking about the project to sequence Homo neanderthalensis.

The editorial is actually more about senior faculty members and in particular how to identify one:

- You are senior faculty if you can actually remember when more than 10% of submitted grants got funded.

- You are senior faculty if you can remember when there was only one Nature.

You are senior faculty if you still get a lot of invitations to meetings, but they're all to deliver after-dinner talks.

- You are senior faculty if students sometimes ask you if you ever heard Franklin in person, and they mean Benjamin, not Aretha.

- You are senior faculty if a junior colleague wants to know what it was like before computers, and you can tell her.

- You are senior faculty when the second joint on the little finger of your left hand is the only joint that isn't stiff at the end of a long seminar.

- You are senior faculty if you sleep through most of those long seminars.

- You are senior faculty if you visit the Museum of Natural History, and the dummies in the exhibit of Stone Age man all remind you of people you went to school with.

- You are senior faculty if you find yourself saying "Back in my day" or "When I was your age" at least twice a week.

- You are senior faculty if you actually know what investigator-initiated, hypothesis-driven research means.

- You are senior faculty if you occasionally think that maybe you should attend a faculty meeting once in a while.

- You are senior faculty when your CV includes papers you can't remember writing.

I am back from holidays and trying to plow trough the RSS feeds/content alerts that accumulated in these two weeks. I might post on couple of things that catch my eye.

Here is a funny editorial from Gregory A Petsko talking about the project to sequence Homo neanderthalensis.

The editorial is actually more about senior faculty members and in particular how to identify one:

- You are senior faculty if you can actually remember when more than 10% of submitted grants got funded.

- You are senior faculty if you can remember when there was only one Nature.

You are senior faculty if you still get a lot of invitations to meetings, but they're all to deliver after-dinner talks.

- You are senior faculty if students sometimes ask you if you ever heard Franklin in person, and they mean Benjamin, not Aretha.

- You are senior faculty if a junior colleague wants to know what it was like before computers, and you can tell her.

- You are senior faculty when the second joint on the little finger of your left hand is the only joint that isn't stiff at the end of a long seminar.

- You are senior faculty if you sleep through most of those long seminars.

- You are senior faculty if you visit the Museum of Natural History, and the dummies in the exhibit of Stone Age man all remind you of people you went to school with.

- You are senior faculty if you find yourself saying "Back in my day" or "When I was your age" at least twice a week.

- You are senior faculty if you actually know what investigator-initiated, hypothesis-driven research means.

- You are senior faculty if you occasionally think that maybe you should attend a faculty meeting once in a while.

- You are senior faculty when your CV includes papers you can't remember writing.

Monday, September 04, 2006

Bio::Blogs #3

The third edition of Bio::Blogs was released a couple of days ago in business|bytes|genes|molecules.

I particularly enjoyed the nice discussions going on in evolgen , about the rifts in scientific communities and in Neil's blog regarding structural genomics data.

The next Bio::Blogs will be edited by Sandra Porter. Send your links and offers to host future editions to bioblogs{at}gmail.com.

The third edition of Bio::Blogs was released a couple of days ago in business|bytes|genes|molecules.

I particularly enjoyed the nice discussions going on in evolgen , about the rifts in scientific communities and in Neil's blog regarding structural genomics data.

The next Bio::Blogs will be edited by Sandra Porter. Send your links and offers to host future editions to bioblogs{at}gmail.com.

Tags: bio::blogs, carnivals, blog carnivals

Sunday, August 27, 2006

Bio::Blogs #3 - call for submissions

The third edition of Bio::Blogs will be up on the 1st of September, edited by mndoci. Send your submissions to the usual email bioblogs {at} gmail.com. There were few submissions so far. It might be a slow month if a lot of people took some time off, or maybe most people are waiting for the last day as usual.

I am back from holidays, trying to digest the emails and RSS feeds accumulated in two weeks. I miss the beach already :).

The third edition of Bio::Blogs will be up on the 1st of September, edited by mndoci. Send your submissions to the usual email bioblogs {at} gmail.com. There were few submissions so far. It might be a slow month if a lot of people took some time off, or maybe most people are waiting for the last day as usual.

I am back from holidays, trying to digest the emails and RSS feeds accumulated in two weeks. I miss the beach already :).

Tags: blog carnivals, bio::blogs

Tuesday, August 15, 2006

Interactome Networks conference

I am going for a two week holidays tomorrow. I really need some boring relaxing days by the beach without thinking to much about anything :).

After that I am going to a conference, Interactome Networks on the Wellcome Trust Genome Campus in Hinxton, UK. I will give a short talk about my last project - "Specificity and evolvability in eukaryotic protein interaction networks". I will try to blog some of the talks, either here or in Nodalpoint.

Here is the current list of talks and posters. If by any chance you are not going and are particularly interested in some of the titles let me know and I will try to have a look.

I am going for a two week holidays tomorrow. I really need some boring relaxing days by the beach without thinking to much about anything :).

After that I am going to a conference, Interactome Networks on the Wellcome Trust Genome Campus in Hinxton, UK. I will give a short talk about my last project - "Specificity and evolvability in eukaryotic protein interaction networks". I will try to blog some of the talks, either here or in Nodalpoint.

Here is the current list of talks and posters. If by any chance you are not going and are particularly interested in some of the titles let me know and I will try to have a look.

Tags: networks, conference blogging

Sunday, August 13, 2006

Science Foo Camp

I am to sleepy to post a coherent account of what happened today at scifoo, I'll try to do it tomorrow in Nodalpoint. It's an amazing mixture of people that they gathered here, social/bio/physics/computer scientists, science fiction writers (futurists?), journal editors, open access and open science advocates and googlers.

To ilustrate how chaotic the meeting has been, here is a picture of the session planing board they put up.

So anyone can just grab a pen and fill up a slot. The only problem so far was actually having too many parallel interesting sessions.

I am to sleepy to post a coherent account of what happened today at scifoo, I'll try to do it tomorrow in Nodalpoint. It's an amazing mixture of people that they gathered here, social/bio/physics/computer scientists, science fiction writers (futurists?), journal editors, open access and open science advocates and googlers.

To ilustrate how chaotic the meeting has been, here is a picture of the session planing board they put up.

So anyone can just grab a pen and fill up a slot. The only problem so far was actually having too many parallel interesting sessions.

Tags: scifoo, conference blogging

Thursday, August 10, 2006

Science Foo Camp

I am going to California this weekend to attend the Science Foo Camp. An event organized by Nature/O'Reilly and hosted by Google. Yeap I get to visit the Googleplex :) (I admit it am I geek). It has been fun to see the event getting set up. It started with a wiki page seeded with some ideas from O'Reilly and some instructions. Then everyone started editing their bios and suggesting/offering talks.

I will be blogging my impressions of the event over at Nodalpoint but probably a good way to keep track of the event is to take a look at the scifoo tag in connotea.

(official announcement)

I am going to California this weekend to attend the Science Foo Camp. An event organized by Nature/O'Reilly and hosted by Google. Yeap I get to visit the Googleplex :) (I admit it am I geek). It has been fun to see the event getting set up. It started with a wiki page seeded with some ideas from O'Reilly and some instructions. Then everyone started editing their bios and suggesting/offering talks.

I will be blogging my impressions of the event over at Nodalpoint but probably a good way to keep track of the event is to take a look at the scifoo tag in connotea.

(official announcement)

Tags: scifoo, science, conference

Wednesday, August 09, 2006

Blogging science .. something like science

(via Open Reading frame and Uncertain Principles) This is what happens when you spend to much time in the lab. Dylan Stiles reports on his analysis of ... his own ear wax?! Creative post to say the least. :).

(via Open Reading frame and Uncertain Principles) This is what happens when you spend to much time in the lab. Dylan Stiles reports on his analysis of ... his own ear wax?! Creative post to say the least. :).

Tags: fun in lab, NMR

Tuesday, August 08, 2006

Identifying protein-protein interfaces

There were several interesting papers on protein interaction in the last week. For example, in PLoS Computational Biology, Kim et al described an improvement to a method that classifies interaction interfaces according to their geometry. The results of the analysis are available on their site SCOPPI.

It seems reasonable to believe that there is a limited number of protein interaction types (predicted to be around 10 thousand), much the same way that there is probably a limited number of folds used in nature. This database, along side others like the iPFAM database, provide templates on which to model other protein interactions with reasonable homology. As first proposed by Aloy and Russell, if we know that two proteins interact, for example with a yeast-two-hybrid experiment we might be able to use these databases to identity and model the interaction interface between the two proteins.

Here is an example of two complexes taken from the paper. The nodes and edges view hides the fact that RBP and RCC1 interact through different interfaces.

Although is has been very useful to look at protein networks as of bunch of nodes connected by edges for some analysis it would be much more informative to know what are the interacting interfaces.

The authors used the database to claim that:

- hub proteins interact with proteins using many distinct faces (what they actually show is that domains that interact with many different other domain types have more distint faces);

- two thirds of gene fusions conserve the binding orientation;

- the apparent poor conservation of interfaces is due to the diversity of interactions and partners (in my opinion it is more a suggestion that proof);

- the interfaces common to archae, bacteria and eukaryotes and mostly symmetric homo-dimers, suggesting that asymmetric and hetero interactions evolved from symmetric homo-dimers.

<speculation> Maybe if we could convert the current human interactome into more than nodes and edges we could try to see if some of disease causing polymorphisms can be explained by how they affect the interfaces. Maybe we could use this to do interaction KOs instead of whole proteins </speculation>

There were several interesting papers on protein interaction in the last week. For example, in PLoS Computational Biology, Kim et al described an improvement to a method that classifies interaction interfaces according to their geometry. The results of the analysis are available on their site SCOPPI.

It seems reasonable to believe that there is a limited number of protein interaction types (predicted to be around 10 thousand), much the same way that there is probably a limited number of folds used in nature. This database, along side others like the iPFAM database, provide templates on which to model other protein interactions with reasonable homology. As first proposed by Aloy and Russell, if we know that two proteins interact, for example with a yeast-two-hybrid experiment we might be able to use these databases to identity and model the interaction interface between the two proteins.

Here is an example of two complexes taken from the paper. The nodes and edges view hides the fact that RBP and RCC1 interact through different interfaces.

Although is has been very useful to look at protein networks as of bunch of nodes connected by edges for some analysis it would be much more informative to know what are the interacting interfaces.

The authors used the database to claim that:

- hub proteins interact with proteins using many distinct faces (what they actually show is that domains that interact with many different other domain types have more distint faces);

- two thirds of gene fusions conserve the binding orientation;

- the apparent poor conservation of interfaces is due to the diversity of interactions and partners (in my opinion it is more a suggestion that proof);

- the interfaces common to archae, bacteria and eukaryotes and mostly symmetric homo-dimers, suggesting that asymmetric and hetero interactions evolved from symmetric homo-dimers.

<speculation> Maybe if we could convert the current human interactome into more than nodes and edges we could try to see if some of disease causing polymorphisms can be explained by how they affect the interfaces. Maybe we could use this to do interaction KOs instead of whole proteins </speculation>

Saturday, August 05, 2006

PLoS ONE is accepting manuscripts - Update

(via PLoS blog and Open) The new PLoS ONE journal is now accepting manuscripts for review.They are still taking care of a few bugs and at this moments I could only use the site with Internet Explorer.

We can know have a look at the editorial board and the journal policies. There are some hints of how the user comments and ratings are going to work.

Most journals have some form of funneling, either by perceived impact or subject scope. It is going to be interesting to see what happens with PLoS ONE, given that there is no editorial selection on these criteria.

Update - It is actually working fine with Firefox1.5. When I was seeing it before the the page layout was misbehaving. Sorry for the confusion.

(via PLoS blog and Open) The new PLoS ONE journal is now accepting manuscripts for review.

We can know have a look at the editorial board and the journal policies. There are some hints of how the user comments and ratings are going to work.

Most journals have some form of funneling, either by perceived impact or subject scope. It is going to be interesting to see what happens with PLoS ONE, given that there is no editorial selection on these criteria.

Update - It is actually working fine with Firefox1.5. When I was seeing it before the the page layout was misbehaving. Sorry for the confusion.

Tags: open access, PLoS, PLoS ONE

Friday, August 04, 2006

Meta blogging

Neil Saunders' blog as moved to a new home. Fabrice Jossinet is back blogging about RNA and bioinformatics in Propeller Twist.

If you are interested in RNA and microbiology go check out the new blog of Rosie Redfield. She runs a a microbiology lab at the University of British Columbia and will be blogging about their current research.

If you are interested in a better way to track the comments and responses to your comments in other blogs have a look at coComments. You can have an RSS feed and/or a box in your blog with your comments and responses to the comments that you wish to track.

Neil Saunders' blog as moved to a new home. Fabrice Jossinet is back blogging about RNA and bioinformatics in Propeller Twist.

If you are interested in RNA and microbiology go check out the new blog of Rosie Redfield. She runs a a microbiology lab at the University of British Columbia and will be blogging about their current research.

If you are interested in a better way to track the comments and responses to your comments in other blogs have a look at coComments. You can have an RSS feed and/or a box in your blog with your comments and responses to the comments that you wish to track.

Tags: "meta blogging"

Wednesday, August 02, 2006

Tangled Bank #59

The latest issue of Tangled Bank is up at Science and Reason. It is the first time I participate with a submission. If you are into physics the author of the blog, Charles Daney, is considering starting a physics carnival.

The latest issue of Tangled Bank is up at Science and Reason. It is the first time I participate with a submission. If you are into physics the author of the blog, Charles Daney, is considering starting a physics carnival.

Tags: blog carnivals, science

Tuesday, August 01, 2006

Bio::Blogs#2

The second edition of Bio::Blogs is up at Neil's blog. Go check it out and participate with your insightful comments :). There is a lot of conference blogging on this issue, a nice way to get updates on the conferences you might have missed.

I am happy to say that we have volunteers for the next two editions. Deepak offered to host for September 1st and Sandra Porter will host the October 1st edition.

The second edition of Bio::Blogs is up at Neil's blog. Go check it out and participate with your insightful comments :). There is a lot of conference blogging on this issue, a nice way to get updates on the conferences you might have missed.

I am happy to say that we have volunteers for the next two editions. Deepak offered to host for September 1st and Sandra Porter will host the October 1st edition.

Tags: blog carnivals, bio::blogs

Saturday, July 29, 2006

The likelihood that two proteins interact might depend on the proteins' age - part 2

Abstract

It has been previously shown [1] that S. cerevisiae proteins preferentially interact with proteins of the same estimated likely time of origin. Using a similar approach but focusing on a less broad evolutionary time span I observed that the likelihood for protein interactions depends on the proteins’ age. I had show this previously for the interactome of S. cerevisiae [2] and here I extend the analysis to show that the same is also observed for the interactome of H. sapiens. Importantly the observation does not depend on the experimental method used since removing the yeast-two-hybrid interactions does not alter the result.

Methods and Results

Protein-protein interactions for H.sapiens were obtained from the Human Protein Reference database and from two high-throughput studies excluding any interactions derived from protein complexes. I considered only proteins that were represented in this interactome (i.e. with one or more interactions).

As before I created groups of H. sapiens proteins with different average age using the reciprocal best blast hit method to determine the most likely ortholog in eleven other eukaryotic species (see figure 1 for species names). For a more detailed description of the group selection and the construction of the phylogenetic tree please see the previous post [2].

It is important to note that the placement of C. familiaris does not correspond with other published phylogenetic trees it might be due to the proteins selected for the tree construction. I should consider using different combinations of ancestral proteins to check the robustness of the tree.

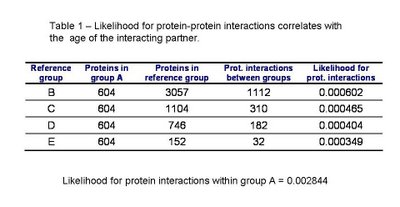

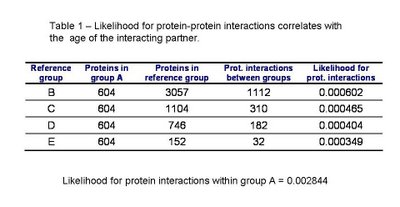

In table 1 we can see the likelihood for protein interactions to occur within the ancestral proteins of group A and between the ancestral proteins and other groups of decreasing average age. As published by Qin et al. and as I had observed before for S. cerevisiae, the interactions within groups of the same age (group A) are more likely than between groups of proteins of different times of origin. Also, the likelihood for a protein to interact with an ancestral protein depends on the age of this protein. Confirming the pervious observation that the younger the protein is the less likely it is to interact with an ancestral protein.

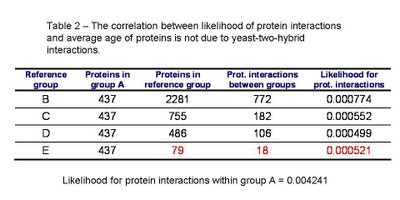

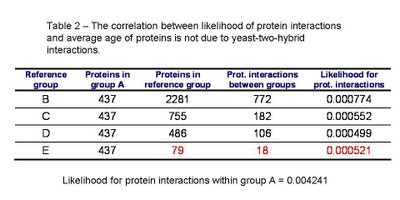

I redid the analysis excluding yeast-two-hybrid interactions from the dataset. As it can be see in table 2, the results are qualitatively the same. There is a small increase in the likelihood of interaction with the ancestral proteins for the youngest group (highlighted in red in table 2) that is likely due to lack of data.

Caveats and possible continuations

I still have to test the statistical significance of these observations and control for possible other effects like protein size and protein expression that could explain these results.

I am interested in continuing this further as an open project. Fallowing the suggestion of Roland Krause I will soon start a wiki page to dump the data bits accumulated for open discussion. Hopefully more people will join in and maybe we can together shape up a small communication.

[1]Qin H, Lu HH, Wu WB, Li WH. Evolution of the yeast protein interaction network. Proc Natl Acad Sci U S A. 2003 Oct 28;100(22):12820-4. Epub 2003 Oct 13

[2]Beltrao. P The likelihood that two proteins interact might depend on the proteins' age Blog post

Abstract

It has been previously shown [1] that S. cerevisiae proteins preferentially interact with proteins of the same estimated likely time of origin. Using a similar approach but focusing on a less broad evolutionary time span I observed that the likelihood for protein interactions depends on the proteins’ age. I had show this previously for the interactome of S. cerevisiae [2] and here I extend the analysis to show that the same is also observed for the interactome of H. sapiens. Importantly the observation does not depend on the experimental method used since removing the yeast-two-hybrid interactions does not alter the result.

Methods and Results

Protein-protein interactions for H.sapiens were obtained from the Human Protein Reference database and from two high-throughput studies excluding any interactions derived from protein complexes. I considered only proteins that were represented in this interactome (i.e. with one or more interactions).

As before I created groups of H. sapiens proteins with different average age using the reciprocal best blast hit method to determine the most likely ortholog in eleven other eukaryotic species (see figure 1 for species names). For a more detailed description of the group selection and the construction of the phylogenetic tree please see the previous post [2].

It is important to note that the placement of C. familiaris does not correspond with other published phylogenetic trees it might be due to the proteins selected for the tree construction. I should consider using different combinations of ancestral proteins to check the robustness of the tree.

In table 1 we can see the likelihood for protein interactions to occur within the ancestral proteins of group A and between the ancestral proteins and other groups of decreasing average age. As published by Qin et al. and as I had observed before for S. cerevisiae, the interactions within groups of the same age (group A) are more likely than between groups of proteins of different times of origin. Also, the likelihood for a protein to interact with an ancestral protein depends on the age of this protein. Confirming the pervious observation that the younger the protein is the less likely it is to interact with an ancestral protein.

I redid the analysis excluding yeast-two-hybrid interactions from the dataset. As it can be see in table 2, the results are qualitatively the same. There is a small increase in the likelihood of interaction with the ancestral proteins for the youngest group (highlighted in red in table 2) that is likely due to lack of data.

Caveats and possible continuations

I still have to test the statistical significance of these observations and control for possible other effects like protein size and protein expression that could explain these results.

I am interested in continuing this further as an open project. Fallowing the suggestion of Roland Krause I will soon start a wiki page to dump the data bits accumulated for open discussion. Hopefully more people will join in and maybe we can together shape up a small communication.

[1]Qin H, Lu HH, Wu WB, Li WH. Evolution of the yeast protein interaction network. Proc Natl Acad Sci U S A. 2003 Oct 28;100(22):12820-4. Epub 2003 Oct 13

[2]Beltrao. P The likelihood that two proteins interact might depend on the proteins' age Blog post

Tags: protein interactions, evolution, networks

Friday, July 28, 2006

Binding specificity and complexity

There is a paper out in PNAS about the distribution of free energy of binding for the yeast-two-hybrid datasets. Although I still have to dig into the model they used I found the result quite interesting. They observe that the average binding energy decreases with cellular complexity.

They have some sentences in there that made my hairs stand like: "more evolved organisms have weaker binary protein-protein binding". What does "more evolved" mean ? Also on figure 4 of the paper they plot miu (a parameter related to the average binding energy) over divergence times without saying what species they are comparing.

This result fits well with another paper published a while ago in PLoS Comp Bio about protein family expansions and complexity. Christine Vogel and Cyrus Chothia show (among other things) what protein domains expansion best correlate with complexity. They used cell numbers as a proxy for species complexity. If you look at the top of the list (in table 2) you can find several of the peptide binding domains, know to be of low specificity, given that they do not require a folded structure to interact with.

What I would like to know is the correlation between binding affinity and binding specificity. For example SH2 domains bind much more tightly than SH3 domains although they are both not very specific binding domains. Maybe in general it could be said that average lower binding affinities correspond to lower average binding specificity.

Why would complexity correlate with binding specificity ? I think one important factor is cellular size. An increase is size has allowed for exploration of spacial factors in determining cellular response. Specificity of binding in the real cell (not in binary assays) is determined also by localization at sub cellular structures.

One practical reminder coming from this is that even if we have the perfect method to determine biophysical binding specificity we are still going to get poor results if we cannot predict all other components that will determine if the two proteins will bind or not (i.e localization, expression).

There is a paper out in PNAS about the distribution of free energy of binding for the yeast-two-hybrid datasets. Although I still have to dig into the model they used I found the result quite interesting. They observe that the average binding energy decreases with cellular complexity.

They have some sentences in there that made my hairs stand like: "more evolved organisms have weaker binary protein-protein binding". What does "more evolved" mean ? Also on figure 4 of the paper they plot miu (a parameter related to the average binding energy) over divergence times without saying what species they are comparing.

This result fits well with another paper published a while ago in PLoS Comp Bio about protein family expansions and complexity. Christine Vogel and Cyrus Chothia show (among other things) what protein domains expansion best correlate with complexity. They used cell numbers as a proxy for species complexity. If you look at the top of the list (in table 2) you can find several of the peptide binding domains, know to be of low specificity, given that they do not require a folded structure to interact with.

What I would like to know is the correlation between binding affinity and binding specificity. For example SH2 domains bind much more tightly than SH3 domains although they are both not very specific binding domains. Maybe in general it could be said that average lower binding affinities correspond to lower average binding specificity.

Why would complexity correlate with binding specificity ? I think one important factor is cellular size. An increase is size has allowed for exploration of spacial factors in determining cellular response. Specificity of binding in the real cell (not in binary assays) is determined also by localization at sub cellular structures.

One practical reminder coming from this is that even if we have the perfect method to determine biophysical binding specificity we are still going to get poor results if we cannot predict all other components that will determine if the two proteins will bind or not (i.e localization, expression).

TOPAZ and PLoS ONE

According to the PLoS blog the new PLoS ONE will be accepting submissions soon. I guess they will at the same time release the TOPAZ system that will likely be available here.

According to the PLoS blog the new PLoS ONE will be accepting submissions soon. I guess they will at the same time release the TOPAZ system that will likely be available here.

"TOPAZ will serve the rapidly growing demand for sophisticated tools and resources to read and use the scientific and medical literature, allowing scholarly publishers, societies, universities, and research communities to publish open access journals economically and efficiently."

Tags: open access, plos, topaz

Sunday, July 23, 2006

Opening up the scientific process

During my stay at the EMBL, for the past couple of years, it already happened more than once that people I know have been scooped. This simple means that all the hard work that they have been doing was already done by someone else that manage to publish it a bit sooner and therefore limited severely the usefulness of their discoveries. Very few journals are interested in publishing research that merely confirms other published results.

From talking to other people, I have come to accept that scooping is a part of science. There is no other possible conclusion from this but to accept that the scientific process is very flawed. We should not be wasting resources literally racing with each other to be the first person to discover something. When you try to explain to non-scientist, that it is very common to have 3 or 4 labs doing exactly the same thing they usually have a hard time integrating this with their perception of science as the pursue of knowledge trough collaboration.

I am probably naïve given that I am only doing this for a couple of years but I don’t pretend to say that we do not need competition in science. We need to keep each other in check exactly because lack of competition leads to waste of resources. I would argue however that right now the scientific process is creating competition at wrong levels decreasing the potential productivity.

So how do we work and what do we aim to produce? We are in the business of producing manuscripts accepted in peer reviewed journals. To have competition there most be a scarce element. In our case the limited element is the attention of fellow scientist. Given that scientist’s attention is scarce we all compete for the limited number of time that researchers have to read papers every week. So the good news is that the system tends to give credit to high quality manuscripts. This means that research projects and ongoing results should be absolutely confidential and everything should be focused in getting that Science or Nature paper.

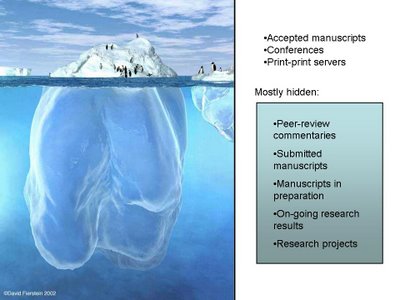

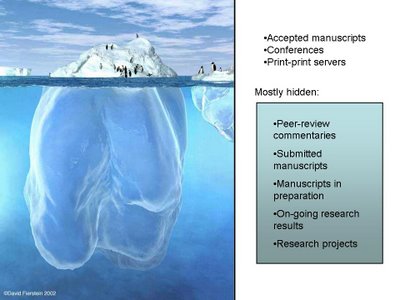

I found a beautiful drawing of an iceberg (used here with permission from the author, David Fierstein) that I think illustrates the problem we have today by focusing the competition on the manuscripts. Only a small fraction of the research process is in view.

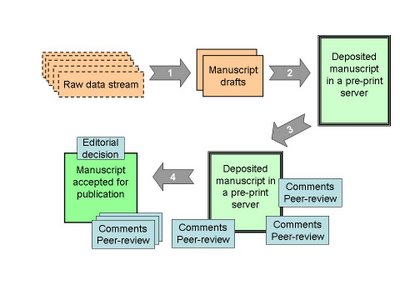

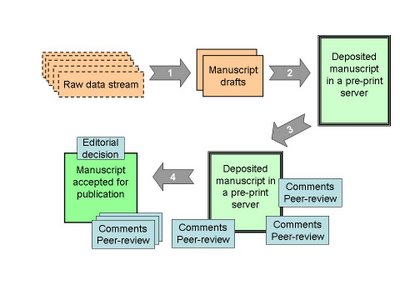

Wouldn’t it be great if we could find a way to make most of the scientific process public but at the same time guaranty some level of competition? What I think we could do would be to define steps in the process that we could say are independent, which can work as modules. Here I mean module in the sense of a black box with inputs and outputs that we wire together without caring too much on how the internals of the boxes work. I am thinking these days about these modules and here is a first draft of what this could look like:

The data streams would be, as the name suggests, a public view of the data being produced by a group or individual researcher. Blogs are a simple way this could be achieved today (see for example this blog). The manuscripts could be built in wikis by selection of relevant data bits from the streams that fit together to answer an interesting question. This is where I propose that the competition would come in. Only those relevant bits of data that better answer the question would be used. The authors of the manuscript would be all those that contributed data bits or in some other way contributed for the manuscript creation. In this way all the data would be public and still a healthy level of competition would be maintained.

The rest of the process could go on in public view. Versions of the manuscript deemed stable could be deposited in a pre-print server and comments and peer review would commence. Latter there could still be another step of competition to get the paper formally accepted in a journal.

One advantage of this is that it is not a revolution of the scientific process. People could still work in their normal research environment closed within their research groups. This is just a model of how we could extend the system to make it mostly open and public. The technologies are all here: structured blogging for the data streams, wikis for the manuscripts and online communities to drive the research agendas.

I think it is important to view the scientific process as a group of modules also because it allows us latter to think of different ways to wire the modules together. Increasing the modularity should permit us to innovate. For example we can latter think of ways that the data streams are brought together to answer questions, etc.

During my stay at the EMBL, for the past couple of years, it already happened more than once that people I know have been scooped. This simple means that all the hard work that they have been doing was already done by someone else that manage to publish it a bit sooner and therefore limited severely the usefulness of their discoveries. Very few journals are interested in publishing research that merely confirms other published results.

From talking to other people, I have come to accept that scooping is a part of science. There is no other possible conclusion from this but to accept that the scientific process is very flawed. We should not be wasting resources literally racing with each other to be the first person to discover something. When you try to explain to non-scientist, that it is very common to have 3 or 4 labs doing exactly the same thing they usually have a hard time integrating this with their perception of science as the pursue of knowledge trough collaboration.

I am probably naïve given that I am only doing this for a couple of years but I don’t pretend to say that we do not need competition in science. We need to keep each other in check exactly because lack of competition leads to waste of resources. I would argue however that right now the scientific process is creating competition at wrong levels decreasing the potential productivity.

So how do we work and what do we aim to produce? We are in the business of producing manuscripts accepted in peer reviewed journals. To have competition there most be a scarce element. In our case the limited element is the attention of fellow scientist. Given that scientist’s attention is scarce we all compete for the limited number of time that researchers have to read papers every week. So the good news is that the system tends to give credit to high quality manuscripts. This means that research projects and ongoing results should be absolutely confidential and everything should be focused in getting that Science or Nature paper.

I found a beautiful drawing of an iceberg (used here with permission from the author, David Fierstein) that I think illustrates the problem we have today by focusing the competition on the manuscripts. Only a small fraction of the research process is in view.

Wouldn’t it be great if we could find a way to make most of the scientific process public but at the same time guaranty some level of competition? What I think we could do would be to define steps in the process that we could say are independent, which can work as modules. Here I mean module in the sense of a black box with inputs and outputs that we wire together without caring too much on how the internals of the boxes work. I am thinking these days about these modules and here is a first draft of what this could look like:

The data streams would be, as the name suggests, a public view of the data being produced by a group or individual researcher. Blogs are a simple way this could be achieved today (see for example this blog). The manuscripts could be built in wikis by selection of relevant data bits from the streams that fit together to answer an interesting question. This is where I propose that the competition would come in. Only those relevant bits of data that better answer the question would be used. The authors of the manuscript would be all those that contributed data bits or in some other way contributed for the manuscript creation. In this way all the data would be public and still a healthy level of competition would be maintained.

The rest of the process could go on in public view. Versions of the manuscript deemed stable could be deposited in a pre-print server and comments and peer review would commence. Latter there could still be another step of competition to get the paper formally accepted in a journal.

One advantage of this is that it is not a revolution of the scientific process. People could still work in their normal research environment closed within their research groups. This is just a model of how we could extend the system to make it mostly open and public. The technologies are all here: structured blogging for the data streams, wikis for the manuscripts and online communities to drive the research agendas.

I think it is important to view the scientific process as a group of modules also because it allows us latter to think of different ways to wire the modules together. Increasing the modularity should permit us to innovate. For example we can latter think of ways that the data streams are brought together to answer questions, etc.

Tags: open science, science2.0, science

Friday, July 21, 2006

Bio::Blogs #2 - call for submissions

(via Nodalpoint) This is just a quick reminder that we have 10 days to submit links to the second edition of Bio::Blogs. You can send your suggestions to bioblogs {at} gmail.com. Also if you wish to host future editions send in a quick email with your name and link to your blog to the same email address.

(via Nodalpoint) This is just a quick reminder that we have 10 days to submit links to the second edition of Bio::Blogs. You can send your suggestions to bioblogs {at} gmail.com. Also if you wish to host future editions send in a quick email with your name and link to your blog to the same email address.

Tags: bio blogs, blog carnival, bioinformatics

Monday, July 17, 2006

Conference on Systems Biology of Mammalian Cells

There was a Systems Biology conference here in Heidelberg last week. For those interested the recorded talks are now available on their site. There is a lot of interesting things about the behavior of network motifs and about network modeling.

There was a Systems Biology conference here in Heidelberg last week. For those interested the recorded talks are now available on their site. There is a lot of interesting things about the behavior of network motifs and about network modeling.

Tags: systems biology, conference, webcast, science

Sunday, July 16, 2006

Blog changes

Notes from the Biomass is back again in a new website. I was cleaning the links on the blog to better reflect what I am actually reading and while I was at it I changed the template. It looks better in IE than in Firefox but I really don't have the time nor the ability to work on a good design.

Notes from the Biomass is back again in a new website. I was cleaning the links on the blog to better reflect what I am actually reading and while I was at it I changed the template. It looks better in IE than in Firefox but I really don't have the time nor the ability to work on a good design.

Tuesday, July 11, 2006

Defrag my life

I am taking the week to visit my former lab in Aveiro, Portugal where I spent one year trying to understand how a codon reassignment occurred in the evolutionary past of C. albicans. This was where I first got into Perl and the wonders of comparative genomics.

It brings back a lot of memories every time I come back to one of the cities I lived in before (6 cities and counting) and I sometimes wonder if it is really necessary for scientists to live such fragmented lives.

reboot, restart, new program.

The regular programming will return soon :).

I am taking the week to visit my former lab in Aveiro, Portugal where I spent one year trying to understand how a codon reassignment occurred in the evolutionary past of C. albicans. This was where I first got into Perl and the wonders of comparative genomics.

It brings back a lot of memories every time I come back to one of the cities I lived in before (6 cities and counting) and I sometimes wonder if it is really necessary for scientists to live such fragmented lives.

reboot, restart, new program.

The regular programming will return soon :).

Tuesday, July 04, 2006

Re: The ninth wave

I usually reed Gregory A Petsko' comments and editorials in Genome Biology that are unfortunately only available with subscription. In the last edition of the journal he wrote a comment entitled "The ninth wave". I have lived most of my life 10min away from the Atlantic ocean and at least to my recollection we used to talk about the 7th wave not the ninth as the biggest wave in a set of waves, but this it not the point :).

Petsko argues that the increase of free access to information on the web and of computer savvy investigators presents a clear danger of a flood of useless correlations hinting at potential discoveries never followed by careful experimental work:

This reminded me of a review I read recently from Andy Clark (via Evolgen). Andy Clark talks about the huge increase of researchers in comparative genomics:

I have a feeling that this is the opinion of a lot of researchers. There is this generalized consensus that people working on computational biology have it easy. Sitting at the computer all day, inventing correlations with other people's data.

Maybe some people feel this way because it is relatively fast to go from idea to result using computers if you have in a mind clearly what you want to test while the experimental work certainly takes longer.

Why should I re-do the experimental work if I can answer a question that I think is interesting using available information ? I should be criticized if I try to overinterpret the results, if the methods used are not appropriate or if the question is not relevant but I should not be criticized for looking for an answer the fastest way I can.

I usually reed Gregory A Petsko' comments and editorials in Genome Biology that are unfortunately only available with subscription. In the last edition of the journal he wrote a comment entitled "The ninth wave". I have lived most of my life 10min away from the Atlantic ocean and at least to my recollection we used to talk about the 7th wave not the ninth as the biggest wave in a set of waves, but this it not the point :).

Petsko argues that the increase of free access to information on the web and of computer savvy investigators presents a clear danger of a flood of useless correlations hinting at potential discoveries never followed by careful experimental work:

Computational analysis of someone else's data, on the other hand, always produces results, and all too often no one but the cognoscenti can tell if these results mean anything.

This reminded me of a review I read recently from Andy Clark (via Evolgen). Andy Clark talks about the huge increase of researchers in comparative genomics:

...one of its worst disasters is that it has created a hoard of genomics investigators who think that evolutionary biology is just fun, speculative story telling. Sadly, much of the scientific publication industry seems to respond to the herd as much as it does to scientific rigor, and so we have a bit of a mess on our hands.

I have a feeling that this is the opinion of a lot of researchers. There is this generalized consensus that people working on computational biology have it easy. Sitting at the computer all day, inventing correlations with other people's data.

Maybe some people feel this way because it is relatively fast to go from idea to result using computers if you have in a mind clearly what you want to test while the experimental work certainly takes longer.

Why should I re-do the experimental work if I can answer a question that I think is interesting using available information ? I should be criticized if I try to overinterpret the results, if the methods used are not appropriate or if the question is not relevant but I should not be criticized for looking for an answer the fastest way I can.

Tags: bioinformatics, meme

Monday, July 03, 2006

Journal policies on preprint servers (2)

Recently I did a survey on the different journal policies regarding preprint servers. I am interested in this because I feel it is important to separate the peer review process from the time-stamping (submission) of a scientific communication. Establishing this separation allows for exploration of alternative and parallel ways of determining the value of a scientific communication. This is only possible if journals accept manuscripts previously deposited in pre-print servers.

Today I received the answer from Bioinformatics:

If you also think that this model, already very established in physics and maths, is useful you can also sent some mails to your journals of interest to enquire about their policies. If enough authors voice their interest there will be more journals accepting manuscripts from pre-print servers.

I think we are now lacking a biomedical preprint server. The Genome Biology journal served until early this year also as a preprint server but they discontinued this practice. Maybe arxiv could expand to include biomedical manuscripts (they already accept quantitative biology manuscripts) .

Recently I did a survey on the different journal policies regarding preprint servers. I am interested in this because I feel it is important to separate the peer review process from the time-stamping (submission) of a scientific communication. Establishing this separation allows for exploration of alternative and parallel ways of determining the value of a scientific communication. This is only possible if journals accept manuscripts previously deposited in pre-print servers.

Today I received the answer from Bioinformatics:

"The Executive Editors have advised that we will allow authors to submit manuscripts to a preprint archive."

If you also think that this model, already very established in physics and maths, is useful you can also sent some mails to your journals of interest to enquire about their policies. If enough authors voice their interest there will be more journals accepting manuscripts from pre-print servers.

I think we are now lacking a biomedical preprint server. The Genome Biology journal served until early this year also as a preprint server but they discontinued this practice. Maybe arxiv could expand to include biomedical manuscripts (they already accept quantitative biology manuscripts) .

Tags: preprint server, bioinformatics, peer review, science

Saturday, July 01, 2006

Bio::Blogs # 1

An editorial of sorts

Welcome to the first edition of Bio::Blogs, a blog carnival covering all subjects related to bioinformatics and computational biology. The main objectives of Bio::Blogs are, in my opinion, to help nit together the bioinformatics blogging community and to showcase some interesting posts on these subjects to other communities. Hopefully it will serve as incentive for other people in the area to start their own blogs and to join in the conversation.

I get to host this edition and I decided to format it more or less like a journal with three sections:1) Conference reports; 2) Primers and reviews; 3) Blog articles. I think this reflects also my opinion on what could be a future role of these carnivals, to serve as a path for certification of scientific content parallel to the current scientific journals.

Given that there were so few submissions I added some links myself. Hopefully in the next editions we can get some more publicity and participation :). Talking about future editions, the second edition of Bio::Blogs will be hosted by Neil and we have now a full month to make something up in our blogs and submit the link to bioblogs{at}gmail{dot}com.

Conference Reports

I selected a blog post from Alf describing what was discussed in a recent conference dedicated to Data Webs. There is a lot of information about potential ways to deal with the increase of data submitted all over the web in many different formats. I remember seeing the advert for this conference and I was intrigued to see Philip Bourne, the editor-in-chief of PLoS Computational Biology, among the speakers. I see know that he is involved in publishing tools under development in PLoS.

Primers & Reviews

Stew from Flags and Lollipops sent in this link to a review on the use of bioinformatics to hunt for disease related genes. He highlights a series of tools and methods that can be used to prioritize candidate genes for experimental validation.

Neil, the next host of Bio::Blogs spent some time with the BioPerl package called Bio::Graphics. He dedicated a blog entry to explain how to create graphics for your results with this package. He gives examples on how to make graphic representations of sequences mapped with blast hits and phosphorylation sites.

Chris, a usual around Nodalpoint, nominated a post in Evolgen:

Evolgen has an interesting post about the relative importance (and interest in) cis and trans acting genetic variation in evo-devo. A lot of (computational) energy has thus far been expended in finding regulatory motifs close to genes (ie, within promoter regions), and conserved elements in non-coding sequences. Rather predictably, cis-acting variants have received the lion's share of attention, probably because they present a more tractable problem. The post deals with work from the evo-devo and comparative genomics fields, but these problems have also been attacked from within-species variation perspectives, particularly the genetics of gene expression. But that's next month's post...

Blog articles

I get to link to my last post. I present some very preliminary results on the influence of protein age on the likelihood of protein-protein interactions. Have fun pointing out all the likely flaws in reasoning and hopefully useful ways to build on it.

To wrap things up here is an announcement by Pierre of a possibly useful applet implementing a Genetic Programming Algorithm. If you ever wanted to play around with genetic programming you can have a go with his applet.

That is it for this month. It is a short Bio::Blogs but I hope you find some of these things useful. Don’t forget to submit the links for the next edition before the end of July. Neil will take up the editorial role for #2 in his blog. If you know of a nice symbol that we might use for Bio::Blogs sent it in as well.

An editorial of sorts

Welcome to the first edition of Bio::Blogs, a blog carnival covering all subjects related to bioinformatics and computational biology. The main objectives of Bio::Blogs are, in my opinion, to help nit together the bioinformatics blogging community and to showcase some interesting posts on these subjects to other communities. Hopefully it will serve as incentive for other people in the area to start their own blogs and to join in the conversation.

I get to host this edition and I decided to format it more or less like a journal with three sections:1) Conference reports; 2) Primers and reviews; 3) Blog articles. I think this reflects also my opinion on what could be a future role of these carnivals, to serve as a path for certification of scientific content parallel to the current scientific journals.

Given that there were so few submissions I added some links myself. Hopefully in the next editions we can get some more publicity and participation :). Talking about future editions, the second edition of Bio::Blogs will be hosted by Neil and we have now a full month to make something up in our blogs and submit the link to bioblogs{at}gmail{dot}com.

Conference Reports

I selected a blog post from Alf describing what was discussed in a recent conference dedicated to Data Webs. There is a lot of information about potential ways to deal with the increase of data submitted all over the web in many different formats. I remember seeing the advert for this conference and I was intrigued to see Philip Bourne, the editor-in-chief of PLoS Computational Biology, among the speakers. I see know that he is involved in publishing tools under development in PLoS.

Primers & Reviews

Stew from Flags and Lollipops sent in this link to a review on the use of bioinformatics to hunt for disease related genes. He highlights a series of tools and methods that can be used to prioritize candidate genes for experimental validation.

Neil, the next host of Bio::Blogs spent some time with the BioPerl package called Bio::Graphics. He dedicated a blog entry to explain how to create graphics for your results with this package. He gives examples on how to make graphic representations of sequences mapped with blast hits and phosphorylation sites.

Chris, a usual around Nodalpoint, nominated a post in Evolgen:

Evolgen has an interesting post about the relative importance (and interest in) cis and trans acting genetic variation in evo-devo. A lot of (computational) energy has thus far been expended in finding regulatory motifs close to genes (ie, within promoter regions), and conserved elements in non-coding sequences. Rather predictably, cis-acting variants have received the lion's share of attention, probably because they present a more tractable problem. The post deals with work from the evo-devo and comparative genomics fields, but these problems have also been attacked from within-species variation perspectives, particularly the genetics of gene expression. But that's next month's post...

Blog articles

I get to link to my last post. I present some very preliminary results on the influence of protein age on the likelihood of protein-protein interactions. Have fun pointing out all the likely flaws in reasoning and hopefully useful ways to build on it.

To wrap things up here is an announcement by Pierre of a possibly useful applet implementing a Genetic Programming Algorithm. If you ever wanted to play around with genetic programming you can have a go with his applet.

That is it for this month. It is a short Bio::Blogs but I hope you find some of these things useful. Don’t forget to submit the links for the next edition before the end of July. Neil will take up the editorial role for #2 in his blog. If you know of a nice symbol that we might use for Bio::Blogs sent it in as well.

The likelihood that two proteins interact might depend on the proteins' age

Abstract

It has been previously shown[1] that S. cerevisiae proteins preferentially interact with proteins of the same estimated likely time of origin. Using a similar approach but focusing on a less broad evolutionary time span I observed that the likelihood for protein interactions depends on the proteins’ age.

Methods and Results

Protein-protein interactions for S. cerevisiae were obtained from BIND, excluding any interactions derived from protein complexes. I considered only proteins that were represented in this interactome (i.e. with one or more interactions).

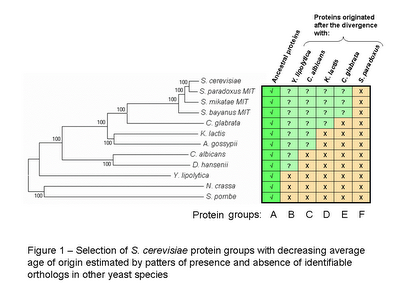

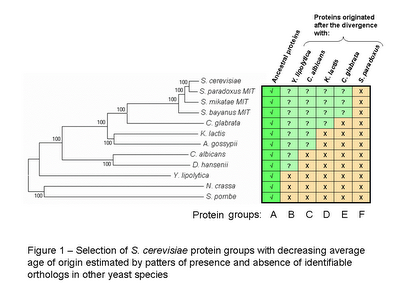

In order to create groups of S. cerevisiae proteins with different average age I used the reciprocal best blast hit method to determine the most likely ortholog in eleven other yeast species (see figure 1 for species names).

S. cerevisiae proteins with orthologs in all species were considered to be ancestral proteins and were grouped into group A. To obtain groups of proteins with decreasing average age of origin, S. cerevisiae proteins were selected according to the absence of identifiable orthologs in other species (see figure 1). It is important to note that these groups of decreasing average protein age are overlapping. Group F is contained in E , both are contained in D and so forth. I could have selected non overlapping groups of proteins with decreasing time of origin but the lower numbers obtained might in a latter stage make statistical analysis more difficult.

The phylogenetic tree in figure 1 (obtained with MEGA 3.1) is a neighbourhood joining tree obtained by concatenating 10 proteins from the ancestral group A. I did it mostly to avoid copyrighted images and too have a graphical representation of the species divergence.

To determine the effect of protein age on the likelihood of interaction with ancestral proteins I counted the number of interactions between group A and the other groups of proteins (see table 1).

From the data it is possible to observe that protein-interactions within groups (within group A) is more likely than protein-interactions between groups. This is in agreement with the results from Qin et al.[1]. Also the likelihood for a protein to interact with an ancestral protein depends on the age of this protein. This simple analysis suggests that the younger the protein is the less likely it is to interact with an ancestral protein.

One possible use of this observation, if it holds to further scrutiny, would be to use the likely time of origin of the proteins as information to include in protein-protein prediction algorithms.

Caveats and possible continuations

The protein-protein interactions used here also contain the high-throughput studies and therefore the interactome used should be considered with caution. I might redo this analysis with a recent set of interactions compiled from the literature[2] but this will also introduce some bias into the interactome.

I should do some statistical analysis to determine if the differences observed are at all significant. If the differences are significant I should try to correlate the likelihood of interactions with a quantitative measure like average protein identity.

References

[1]Qin H, Lu HH, Wu WB, Li WH. Evolution of the yeast protein interaction network. Proc Natl Acad Sci U S A. 2003 Oct 28;100(22):12820-4. Epub 2003 Oct 13

[2]Reguly T, Breitkreutz A, Boucher L, et al. Comprehensive curation and analysis of global interaction networks in Saccharomyces cerevisiae. J Biol. 2006 Jun 8;5(4):11 [Epub ahead of print]

Abstract

It has been previously shown[1] that S. cerevisiae proteins preferentially interact with proteins of the same estimated likely time of origin. Using a similar approach but focusing on a less broad evolutionary time span I observed that the likelihood for protein interactions depends on the proteins’ age.

Methods and Results

Protein-protein interactions for S. cerevisiae were obtained from BIND, excluding any interactions derived from protein complexes. I considered only proteins that were represented in this interactome (i.e. with one or more interactions).

In order to create groups of S. cerevisiae proteins with different average age I used the reciprocal best blast hit method to determine the most likely ortholog in eleven other yeast species (see figure 1 for species names).

S. cerevisiae proteins with orthologs in all species were considered to be ancestral proteins and were grouped into group A. To obtain groups of proteins with decreasing average age of origin, S. cerevisiae proteins were selected according to the absence of identifiable orthologs in other species (see figure 1). It is important to note that these groups of decreasing average protein age are overlapping. Group F is contained in E , both are contained in D and so forth. I could have selected non overlapping groups of proteins with decreasing time of origin but the lower numbers obtained might in a latter stage make statistical analysis more difficult.

The phylogenetic tree in figure 1 (obtained with MEGA 3.1) is a neighbourhood joining tree obtained by concatenating 10 proteins from the ancestral group A. I did it mostly to avoid copyrighted images and too have a graphical representation of the species divergence.

To determine the effect of protein age on the likelihood of interaction with ancestral proteins I counted the number of interactions between group A and the other groups of proteins (see table 1).

From the data it is possible to observe that protein-interactions within groups (within group A) is more likely than protein-interactions between groups. This is in agreement with the results from Qin et al.[1]. Also the likelihood for a protein to interact with an ancestral protein depends on the age of this protein. This simple analysis suggests that the younger the protein is the less likely it is to interact with an ancestral protein.

One possible use of this observation, if it holds to further scrutiny, would be to use the likely time of origin of the proteins as information to include in protein-protein prediction algorithms.

Caveats and possible continuations

The protein-protein interactions used here also contain the high-throughput studies and therefore the interactome used should be considered with caution. I might redo this analysis with a recent set of interactions compiled from the literature[2] but this will also introduce some bias into the interactome.

I should do some statistical analysis to determine if the differences observed are at all significant. If the differences are significant I should try to correlate the likelihood of interactions with a quantitative measure like average protein identity.

References

[1]Qin H, Lu HH, Wu WB, Li WH. Evolution of the yeast protein interaction network. Proc Natl Acad Sci U S A. 2003 Oct 28;100(22):12820-4. Epub 2003 Oct 13

[2]Reguly T, Breitkreutz A, Boucher L, et al. Comprehensive curation and analysis of global interaction networks in Saccharomyces cerevisiae. J Biol. 2006 Jun 8;5(4):11 [Epub ahead of print]

Tags: protein interactions, evolution, networks

Sunday, June 25, 2006

Quick links

I stumbled upon a new computational biology blog called Nature's Numbers, looks interesting.

From Science Blogs universe here is a list compiled by Coturnix of upcoming blog carnivals for the next few days. I also remind anyone reading that the deadline for submissions for bio::blogs is coming very soon so send in your links :).

Still in Science Blogs here is an introduction to information theory. I am getting interesting in this as a tool for computational biology but I have a lot to learn on the subject. Here are two papers I fished out that use information theory in biology.

Also, if you want to donate some money, go check out the donors choose challenge of several Science Bloggers. Seed will match the donations up to $10,000 making each donation potentially more useful.

I stumbled upon a new computational biology blog called Nature's Numbers, looks interesting.

From Science Blogs universe here is a list compiled by Coturnix of upcoming blog carnivals for the next few days. I also remind anyone reading that the deadline for submissions for bio::blogs is coming very soon so send in your links :).

Still in Science Blogs here is an introduction to information theory. I am getting interesting in this as a tool for computational biology but I have a lot to learn on the subject. Here are two papers I fished out that use information theory in biology.

Also, if you want to donate some money, go check out the donors choose challenge of several Science Bloggers. Seed will match the donations up to $10,000 making each donation potentially more useful.

Wednesday, June 21, 2006

Journal policies on preprint servers

I mentioned in a previous post that it would be interesting to separate the registration, which allows claims of precedence for a scholarly finding (the submission of a manuscript) from the certification, which establishes the validity of a registered scholarly claim (the peer review process).

This can only happen if journals accept that a manuscript submitted to a preprint server is different from a peer-review article and therefore it should not be considered as prior publication. So what do the journals currently say about preprint servers ? I looked around the different policies, sent some emails and compiled a this list:

Nature: yes but ...

I enquired about this last part of their policy on the peer review forum and this was the response:

Nature Genetics/Nature Biotechnology: yes

PNAS: Yes!

BMC Bioinformatics/BMC Biology/BMC Evolutionary Biology/BMC Genomics/BMC Genetics/Genome Biology: Yes

Molecular Systems Biology: Do you feel lucky ?

Genome Research: No

Science: Do you feel lucky ?

Cell: No ?

PLoS - No clear policy information on the site about this but according to an email I got from PLoS they do consider for publication papers that have been submited in preprint servers. I hope they could make this clear in the policies they have available online.

Bioinformatics,Molecular Biology and Evolution - ??

I sent emails to both journals but I only had an answer from MBE directing me to this policy common to the journals of the Oxford University Press.

In summary most journals I checked will consider papers that have been previously submited to preprint servers, so I might consider in the future to submit my own work to preprint servers before looking for a journal. Very few journals clearly refuse manuscripts that might be available in electronic form but a good number either have no clear policy or reserve the right to reject papers that are available online.

I mentioned in a previous post that it would be interesting to separate the registration, which allows claims of precedence for a scholarly finding (the submission of a manuscript) from the certification, which establishes the validity of a registered scholarly claim (the peer review process).

This can only happen if journals accept that a manuscript submitted to a preprint server is different from a peer-review article and therefore it should not be considered as prior publication. So what do the journals currently say about preprint servers ? I looked around the different policies, sent some emails and compiled a this list:

Nature: yes but ...

Nature allows prior publication on recognised community preprint servers for review by other scientists in the field before formal submission to a journal. The details of the preprint server concerned and any accession numbers should be included in the cover letter accompanying submission of the manuscript to Nature. This policy does not extend to preprints available to the media or that are otherwise publicised before or during the submission and consideration process at Nature.

I enquired about this last part of their policy on the peer review forum and this was the response:

"We are aware that preprint servers such as ArXiv are available to the media, but as things stand we consider for publication, and publish, many papers that have been posted on it, and on other community preprint servers.As long as the authors have not actively sought out media coverage before submission and publication in Nature, we are happy to consider their work."

Nature Genetics/Nature Biotechnology: yes

(...)the presentation of results at scientific meetings (including the publication of abstracts) is acceptable, as is the deposition of unrefereed preprints in electronic archives.

PNAS: Yes!

"Preprints have a long and notable history in science, and it has been PNAS policy that they do not constitute prior publication. This is true whether an author hands copies of a manuscript to a few trusted colleagues or puts it on a publicly accessible web site for everyone to read, as is common now in parts of the physics community. The medium of distribution is not germane. A preprint is not considered a publication because it has not yet been formally reviewed and it is often not the final form of the paper. Indeed, a benefit of preprints is that feedback usually leads to an improved published paper or to no publication because of a revealed flaw. "

BMC Bioinformatics/BMC Biology/BMC Evolutionary Biology/BMC Genomics/BMC Genetics/Genome Biology: Yes

"Any manuscript or substantial parts of it submitted to the journal must not be under consideration by any other journal although it may have been deposited on a preprint server."

Molecular Systems Biology: Do you feel lucky ?

"Molecular Systems Biology reserves the right not to publish material that has already been pre-published (either in electronic or other media)."

Genome Research: No

"Submitted manuscripts must not be posted on any web site and are subject to press embargo."

Science: Do you feel lucky ?

"We will not consider any paper or component of a paper that has been published or is under consideration for publication elsewhere. Distribution on the Internet may be considered prior publication and may compromise the originality of the paper or submission. Please contact the editors with questions regarding allowable postings under this policy."

Cell: No ?

"Manuscripts are considered with the understanding that no part of the work has been published previously in print or electronic format and the paper is not under consideration by another publication or electronic medium."

PLoS - No clear policy information on the site about this but according to an email I got from PLoS they do consider for publication papers that have been submited in preprint servers. I hope they could make this clear in the policies they have available online.

Bioinformatics,Molecular Biology and Evolution - ??

"Authors wishing to deposit their paper in public or institutional repositories may deposit a link that provides free access to the paper, but must stipulate that public availability be delayed until 12 months after first online publication in the journal"

I sent emails to both journals but I only had an answer from MBE directing me to this policy common to the journals of the Oxford University Press.

In summary most journals I checked will consider papers that have been previously submited to preprint servers, so I might consider in the future to submit my own work to preprint servers before looking for a journal. Very few journals clearly refuse manuscripts that might be available in electronic form but a good number either have no clear policy or reserve the right to reject papers that are available online.

Tags: science, peer review, preprint servers

Monday, June 19, 2006

Mendel's Garden and Science Online Seminars

For those interested in evolution and genetics this is a good day. The first issue of Mendel's Garden is out with lots of interesting links. I particularly liked RPM's post on evolution of regulatory regions. I still think that evo-devo should focus a bit more on changes in protein interaction networks but more about that one of these days (hopefully :).

On a related note, Science started a series of online seminars with a primer on "Examining Natural Selection in Humans". This is a flash presentation with voice overs from the authors of a recent Science review on the same subject. I like this idea much more than the podcasts. I am not a big fan of podcasts because it is much faster to scan a text than it is to hear someone read it for you. At least with images there is more information and more appeal to spend some minutes listening to a presentation. The only thing I have against this Science Online Seminars initiative is that there is no RSS feed (I hope it is just a matter of time).

For those interested in evolution and genetics this is a good day. The first issue of Mendel's Garden is out with lots of interesting links. I particularly liked RPM's post on evolution of regulatory regions. I still think that evo-devo should focus a bit more on changes in protein interaction networks but more about that one of these days (hopefully :).